Data Engineering on Google Cloud

The Data Engineering on Google Cloud course gives students the skills to design and build data processing systems, analyze data, and implement machine learning on Google Cloud. It covers structured, unstructured, and streaming data and requires basic knowledge of SQL, data modeling, ETL tasks, and a programming language such as Python. It is ideal for developers working in data processing, analytics, and machine learning. Topics include the role of a data engineer, BigQuery, data lakes, data warehouses, and collaboration with other data teams. Technologies explored include Google Cloud, SQL, Python, BigQuery, data lakes, and data warehouses. Finally, the course helps prepare for the Google Professional Data Engineer certification exam.

Course Objectives

Below is a summary of the main objectives of the Data Engineering on Google Cloud course:

- Learn how to use Google Cloud to design and build data engineering solutions.

- Explore the advanced capabilities of BigQuery for analyzing large datasets.

- Apply data modeling techniques and ETL processes within the Google Cloud ecosystem.

- Develop machine learning skills with Google Cloud tools.

- Integrate data lake and data warehouse services for data storage and analysis on Google Cloud.

- Automate data pipelines using Google Cloud services like Dataflow and Pub/Sub.

- Secure and monitor data infrastructure on Google Cloud.

- Optimize data processing workflows for performance and cost efficiency.

Course Certification

This course helps you prepare to take the Google Cloud Certified Professional Data Engineer exam.

Course Outline

Module 1: Introduction to Data Engineering

- Explore the role of a data engineer.

- Analyze data engineering challenges.

- Intro to BigQuery.

- Data Lakes and Data Warehouses.

- Demo: Federated Queries with BigQuery.

- Transactional Databases vs Data Warehouses.

- Website Demo: Finding PII in your dataset with DLP API.

- Partner effectively with other data teams.

- Manage data access and governance.

- Build production-ready pipelines.

- Review GCP customer case study.

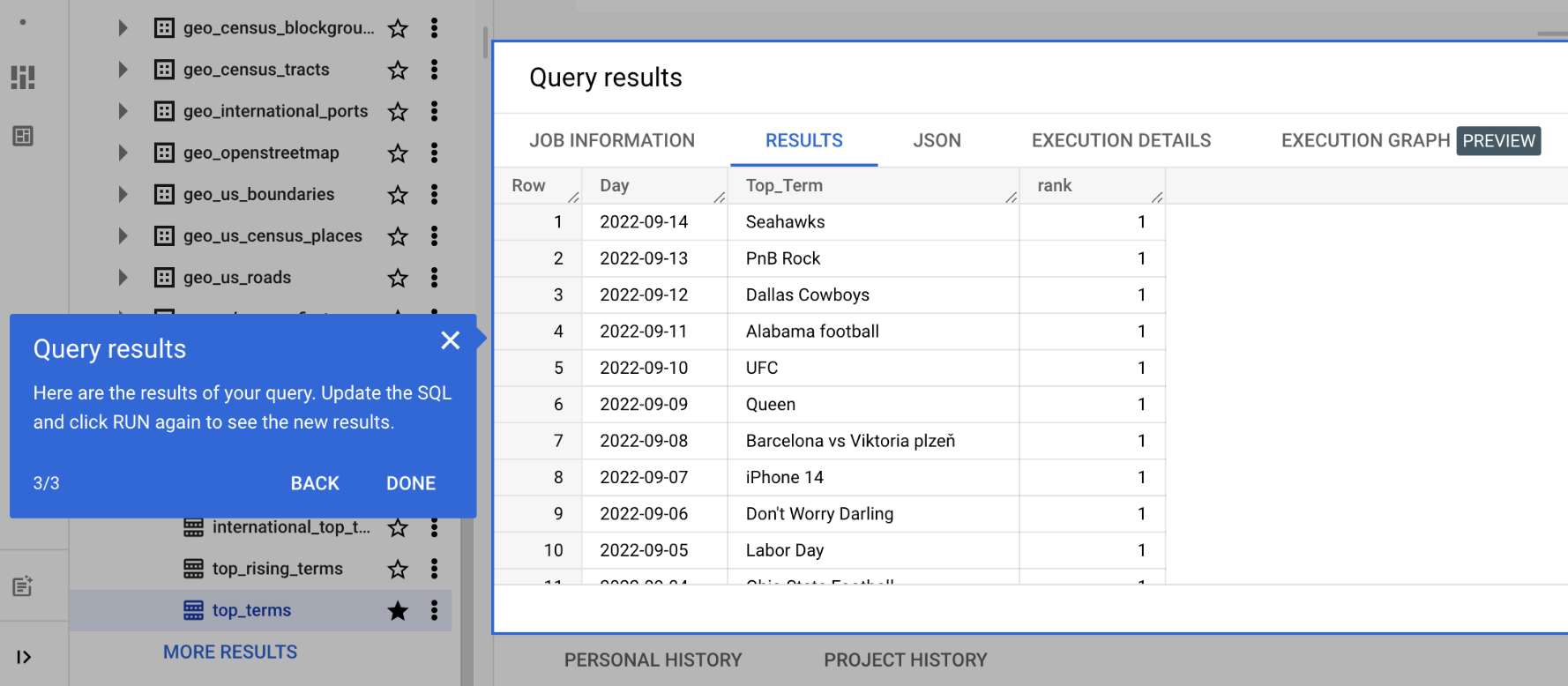

- Lab: Analyzing Data with BigQuery.

Module 2: Building a Data Lake

- Introduction to Data Lakes.

- Data Storage and ETL options on GCP.

- Building a Data Lake using Cloud Storage.

- Optional Demo: Optimizing cost with Google Cloud Storage classes and Cloud Functions.

- Securing Cloud Storage.

- Storing All Sorts of Data Types.

- Video Demo: Running federated queries on Parquet and ORC files in BigQuery.

- Cloud SQL as a relational Data Lake.

- Lab: Loading Taxi Data into Cloud SQL.

Module 3: Building a Data Warehouse

- The modern data warehouse.

- Intro to BigQuery.

- Demo: Query TB+ of data in seconds.

- Getting Started.

- Loading Data.

- Video Demo: Querying Cloud SQL from BigQuery.

- Lab: Loading Data into BigQuery.

- Exploring Schemas.

- Demo: Exploring BigQuery Public Datasets with SQL using INFORMATION_SCHEMA.

- Schema Design.

- Nested and Repeated Fields.

- Demo: Nested and repeated fields in BigQuery.

- Lab: Working with JSON and Array data in BigQuery.

- Optimizing with Partitioning and Clustering.

- Demo: Partitioned and Clustered Tables in BigQuery.

- Preview: Transforming Batch and Streaming Data.

Module 4: Introduction to Building Batch Data Pipelines

- EL, ELT, ETL.

- Quality considerations.

- How to carry out operations in BigQuery.

- Demo: ELT to improve data quality in BigQuery.

- Shortcomings.

- ETL to solve data quality issues.

Module 5: Executing Spark on Cloud Dataproc

- The Hadoop ecosystem.

- Running Hadoop on Cloud Dataproc.

- GCS instead of HDFS.

- Optimizing Dataproc.

- Lab: Running Apache Spark jobs on Cloud Dataproc.

Module 6: Serverless Data Processing with Cloud Dataflow

- Cloud Dataflow.

- Why customers value Dataflow.

- Dataflow Pipelines.

- Lab: A Simple Dataflow Pipeline (Python/Java).

- Lab: MapReduce in Dataflow (Python/Java).

- Lab: Side Inputs (Python/Java).

- Dataflow Templates.

- SQL Dataflow.

Module 7: Manage Data Pipelines with Cloud Data Fusion and Cloud Composer

- Building Batch Data Pipelines visually with Cloud Data Fusion.

- Components.

- UI Overview.

- Building a Pipeline.

- Exploring Data using Wrangler.

- Lab: Building and executing a pipeline graph in Cloud Data Fusion.

- Orchestrating work between GCP services with Cloud Composer.

- Apache Airflow Environment.

- DAGs and Operators.

- Workflow Scheduling.

- Optional Long Demo: Event-triggered Loading of data with Cloud Composer, Cloud Functions, Cloud Storage, and BigQuery.

- Monitoring and Logging.

- Lab: An Introduction to Cloud Composer.

Module 8: Introduction to Processing Streaming Data

- Processing Streaming Data.

Module 9: Serverless Messaging with Cloud Pub/Sub

- Cloud Pub/Sub.

- Lab: Publish Streaming Data into Pub/Sub.

Module 10: Cloud Dataflow Streaming Features

- Cloud Dataflow Streaming Features.

- Lab: Streaming Data Pipelines.

Module 11: High-Throughput BigQuery and Bigtable Streaming Features

- BigQuery Streaming Features.

- Lab: Streaming Analytics and Dashboards.

- Cloud Bigtable.

- Lab: Streaming Data Pipelines into Bigtable.

Module 12: Advanced BigQuery Functionality and Performance

- Analytic Window Functions.

- Using WITH Clauses.

- GIS Functions.

- Demo: Mapping Fastest Growing Zip Codes with BigQuery GeoViz.

- Performance Considerations.

- Lab: Optimizing your BigQuery Queries for Performance.

- Optional Lab: Creating Date-Partitioned Tables in BigQuery.

Module 13: Introduction to Analytics and AI

- What is AI?

- From Ad-hoc Data Analysis to Data Driven Decisions.

- Options for ML models on GCP.

Module 14: Prebuilt ML model APIs for Unstructured Data

- Unstructured Data is Hard.

- ML APIs for Enriching Data.

- Lab: Using the Natural Language API to Classify Unstructured Text.

Module 15: Big Data Analytics with Cloud AI Platform Notebooks

- What's a Notebook?

- BigQuery Magic and Ties to Pandas.

- Lab: BigQuery in Jupyter Labs on AI Platform.

Module 16: Production ML Pipelines with Kubeflow

- Ways to do ML on GCP.

- Kubeflow.

- AI Hub.

- Lab: Running AI models on Kubeflow.

Module 17: Custom Model building with SQL in BigQuery ML

- BigQuery ML for Quick Model Building.

- Demo: Train a model with BigQuery ML to predict NYC taxi fares.

- Supported Models.

- Lab Option 1: Predict Bike Trip Duration with a Regression Model in BQML.

- Lab Option 2: Movie Recommendations in BigQuery ML.

Module 18: Custom Model building with Cloud AutoML

- Why AutoML?

- AutoML Vision.

- AutoML NLP.

- AutoML Tables.

Course Mode

Instructor-Led Remote Live Classroom Training.

Trainers

Trainers are official GCP instructors who are also certified in other IT technologies and have years of hands-on experience in industry and in training.

Lab Topology

For all types of delivery, trainees can access real Cisco equipment and systems in our laboratories or remotely at the Cisco data centers, 24 hours a day. Each participant can implement the various configurations, gaining practical and immediate feedback on the theoretical concepts.

Course Details

Course Prerequisites

- Attendance at the Google Cloud Big Data and Machine Learning Fundamentals course is recommended.

Course Duration

Intensive format: 4 days.

Course Frequency

Course Duration: 4 days (09:00 to 17:00). Ask about other attendance options.

Course Date

- Data Engineering on Google Cloud Course (Intensive Formula) – 07/04/2025 – 09:00 – 17:00

- Data Engineering on Google Cloud Course (Intensive Formula) – 09/06/2025 – 09:00 – 17:00

Steps to Enroll

To enroll, request a callback through the following link, call the office at the international number +355 45 301 313, or send a request by email to info@hadartraining.com.