Serverless Data Processing with Dataflow

Google Cloud's Serverless Data Processing with Dataflow course is a training program for big data professionals who want to advance their Dataflow skills and improve their data processing applications. The course starts with the basics, showing how Apache Beam and Dataflow work together to meet data processing needs without the risk of vendor lock-in. It then focuses on pipeline development, explaining how to turn business logic into data processing applications that run on Dataflow. The final part is dedicated to operations and covers the critical aspects of managing a data application on Dataflow: monitoring, troubleshooting, testing, and reliability. Along the way, participants become familiar with technologies such as the Beam Portability Framework, Shuffle and Streaming Engine, Flexible Resource Scheduling, and IAM. The course also helps prepare for the Google Professional Data Engineer certification exam.

Course Objectives

Below is a summary of the main objectives of the Serverless Data Processing with Dataflow course:

- Understand the integration and use of Apache Beam with Dataflow.

- Develop scalable, serverless data pipelines.

- Apply operational practices for managing Dataflow applications.

- Use technologies such as the Beam Portability Framework and Flexible Resource Scheduling.

- Prepare for the Google Professional Data Engineer certification exam.

- Implement best practices for data pipeline design and optimization.

- Monitor and troubleshoot Dataflow jobs using Google Cloud’s tools.

- Leverage Dataflow’s autoscaling and fault-tolerance features.

Course Certification

This course helps you prepare for the Google Cloud Certified Professional Data Engineer exam.

Course Outline

Module 1: Introduction

- Course Introduction

- Beam and Dataflow Refresher

Module 2: Separating Compute and Storage with Dataflow

- Dataflow

- Beam Portability

- Runner v2

- Container Environments

- Cross-Language Transforms

Module 3: Dataflow, Cloud Operations

- Dataflow Shuffle Service

- Dataflow Streaming Engine

- Flexible Resource Scheduling

Module 4: IAM, Quotas, and Permissions

- IAM

- Quota

Module 5: Security

- Data Locality

- Shared VPC

- Private IPs

- CMEK

Module 6: Beam Concepts Review

- Beam Basics

- Utility Transforms

- DoFn Lifecycle

Module 7: Windows, Watermarks, Triggers

- Windows

- Watermarks

- Triggers
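As a rough illustration of the windowing topic in this module, the sketch below assigns event timestamps to fixed (tumbling) windows in plain Python. It does not use the Beam SDK; the function names `fixed_window` and `group_by_window` and the sample events are assumptions made for this example only.

```python
from collections import defaultdict


def fixed_window(timestamp, size, offset=0):
    """Return the [start, end) fixed window containing `timestamp`.

    Hypothetical helper for illustration; all values are in seconds.
    """
    start = timestamp - (timestamp - offset) % size
    return (start, start + size)


def group_by_window(events, size):
    """Group (timestamp, value) pairs by the fixed window they fall into."""
    windows = defaultdict(list)
    for timestamp, value in events:
        windows[fixed_window(timestamp, size)].append(value)
    return dict(windows)


# Sample events as (event-time seconds, value); made up for this sketch.
events = [(5, "a"), (59, "b"), (60, "c"), (125, "d")]
grouped = group_by_window(events, size=60)
```

With 60-second windows, the events at 5 and 59 seconds share the window starting at 0, while the event at exactly 60 seconds opens the next one, because each window includes its start and excludes its end.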

Module 8: Sources and Sinks

- Sources and Sinks

- Text IO and File IO

- BigQuery IO

- Pub/Sub IO

- Kafka IO

- Bigtable IO

- Avro IO

- Splittable DoFn

Module 9: Schemas

- Beam Schemas

- Code Examples

Module 10: State and Timers

- State API

- Timer API

Module 11: Best Practices

- Schemas

- Handling Unprocessable Data

- Error Handling

- AutoValue Code Generator

- JSON Data Handling

- Utilize DoFn Lifecycle

- Pipeline Optimizations
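The "Handling Unprocessable Data" and "Error Handling" topics concern keeping bad records from failing a whole pipeline. One common approach is to route them to a separate "dead-letter" output for later inspection. The sketch below shows that idea in plain Python rather than the Beam SDK; the function name `parse_with_dead_letter`, the record layout, and the sample inputs are assumptions for illustration only.

```python
import json


def parse_with_dead_letter(raw_records):
    """Split raw JSON strings into parsed records and a dead-letter list."""
    parsed, dead_letter = [], []
    for raw in raw_records:
        try:
            record = json.loads(raw)
            if not isinstance(record, dict):
                raise ValueError("expected a JSON object")
            parsed.append(record)
        except ValueError as error:
            # json.JSONDecodeError is a subclass of ValueError, so both
            # malformed JSON and the wrong top-level type end up here.
            dead_letter.append({"raw": raw, "error": str(error)})
    return parsed, dead_letter


# Sample inputs made up for this sketch: two valid and two invalid records.
raw_records = ['{"id": 1}', "not json", "[1, 2]", '{"id": 2}']
parsed, dead_letter = parse_with_dead_letter(raw_records)
```

Keeping the raw input next to the error message lets the failed records be replayed once the parsing logic or the upstream data is fixed.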

Module 12: Dataflow SQL and DataFrames

- Dataflow and Beam SQL

- Windowing in SQL

- Beam DataFrames

Module 13: Beam Notebooks

- Beam Notebooks

Module 14: Monitoring

- Job List

- Job Info

- Job Graph

- Job Metrics

- Metrics Explorer

Module 15: Logging and Error Reporting

- Logging

- Error Reporting

Module 16: Troubleshooting and Debug

- Troubleshooting Workflow

- Types of Troubles

Module 17: Performance

- Pipeline Design

- Data Shape

- Sources, Sinks, and External Systems

- Shuffle and Streaming Engine

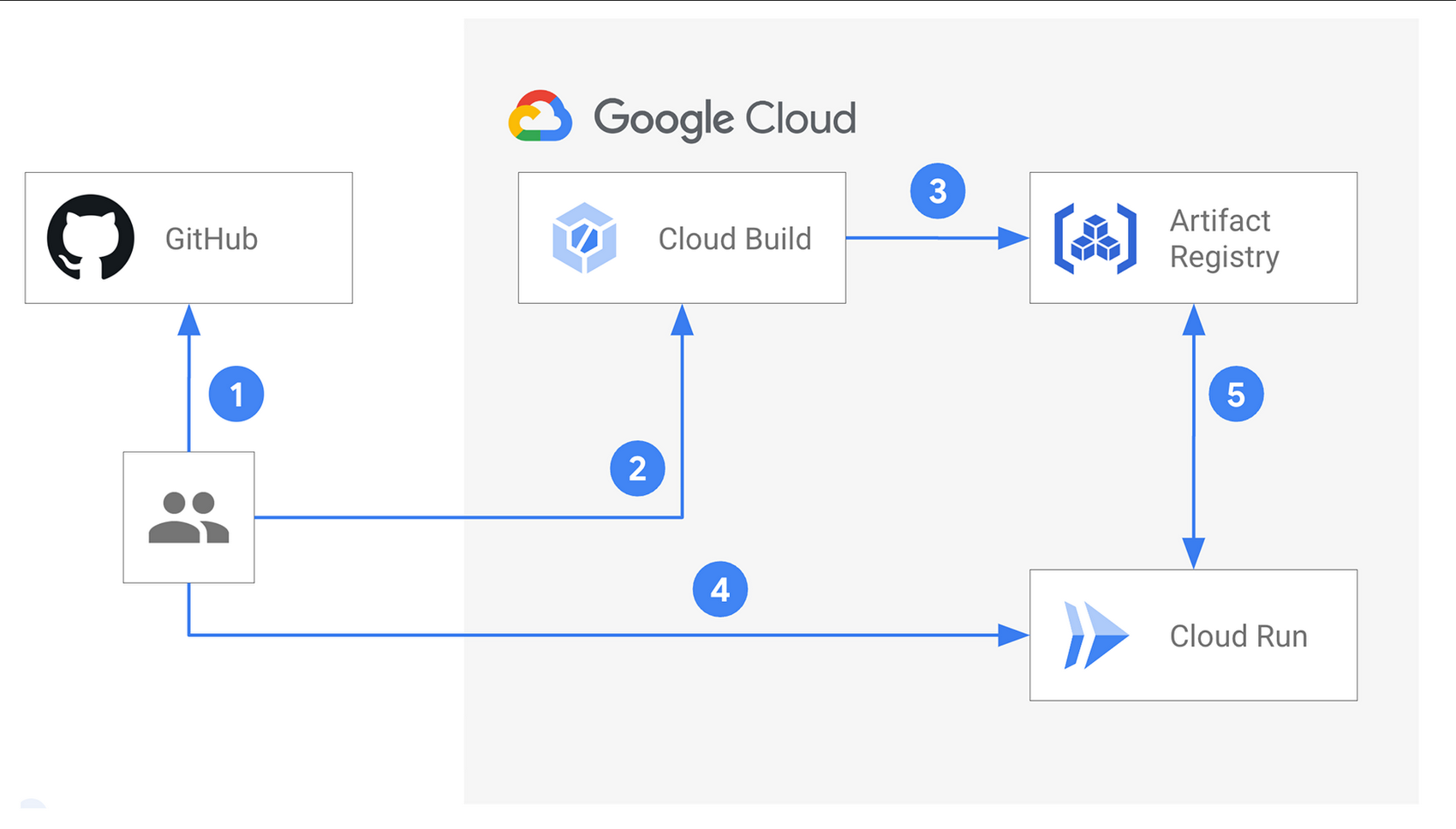

Module 18: Testing and CI/CD

- Testing and CI/CD Overview

- Unit Testing

- Integration Testing

- Artifact Building

- Deployment

Module 19: Reliability

- Introduction to Reliability

- Monitoring

- Geolocation

- Disaster Recovery

- High Availability

Module 20: Flex Templates

- Classic Templates

- Flex Templates

- Using Flex Templates

- Google-provided Templates

Course Mode

Instructor-Led Remote Live Classroom Training

Trainers

Trainers are official Google Cloud instructors who are also certified in other IT technologies, with years of hands-on experience in industry and in training.

Lab Topology

For all types of delivery, trainees can remotely access real Cisco equipment and systems, either in our laboratories or directly at the Cisco data centers, 24 hours a day. Each participant can implement the various configurations and so get practical, immediate feedback on the theoretical concepts.


Course Details

Course Prerequisites

Participation in the Data Engineering on Google Cloud course is recommended.

Course Duration

Intensive format: 3 days

Course Frequency

Course duration: 3 days (09:00 to 17:00). Ask about other attendance options.

Course Date

- Serverless Data Processing with Dataflow Course (Intensive Formula) – 14/04/2025 – 09:00 – 17:00

- Serverless Data Processing with Dataflow Course (Intensive Formula) – 25/06/2025 – 09:00 – 17:00

Steps to Enroll

To enroll, request to be contacted via the following link, call the office at the international number +355 45 301 313, or send a request by email to info@hadartraining.com.